gVisor is a multi-layered security sandbox. seccomp-bpf is

gVisor’s second layer of defense against container escape attacks. gVisor uses

seccomp-bpf to filter its own syscalls by the host kernel. This significantly

reduces the attack surface to the host that a compromised gVisor process can

access. However, this layer comes at a cost: every legitimate system call that

gVisor makes must be evaluated against this filter by the host kernel before it

is actually executed. This blog post contains more than you ever wanted to

know about seccomp-bpf, and explores the past few months of work to optimize

gVisor’s use of it.

A diagram showing gVisor’s two main layers of

security: gVisor itself, and

A diagram showing gVisor’s two main layers of

security: gVisor itself, and seccomp-bpf. This blog post touches on the

seccomp-bpf part.

Tux logo by Larry Ewing and The GIMP.

Understanding seccomp-bpf performance in gVisor

One challenge with gVisor performance improvement ideas is that it is often very difficult to estimate how much they will impact performance without first doing most of the work necessary to actually implement them. Profiling tools help with knowing where to look, but going from there to numbers is difficult.

seccomp-bpf is one area which is actually much more straightforward to

estimate. Because it is a secondary layer of defense that lives outside of

gVisor, and it is merely a filter, we can simply yank it out of gVisor and

benchmark the performance we get. While running gVisor in this way is strictly

less secure and not a mode that gVisor should support, the numbers we get in

this manner do provide an upper bound on the maximum potential performance

gains we could see from optimizations within gVisor’s use of seccomp-bpf.

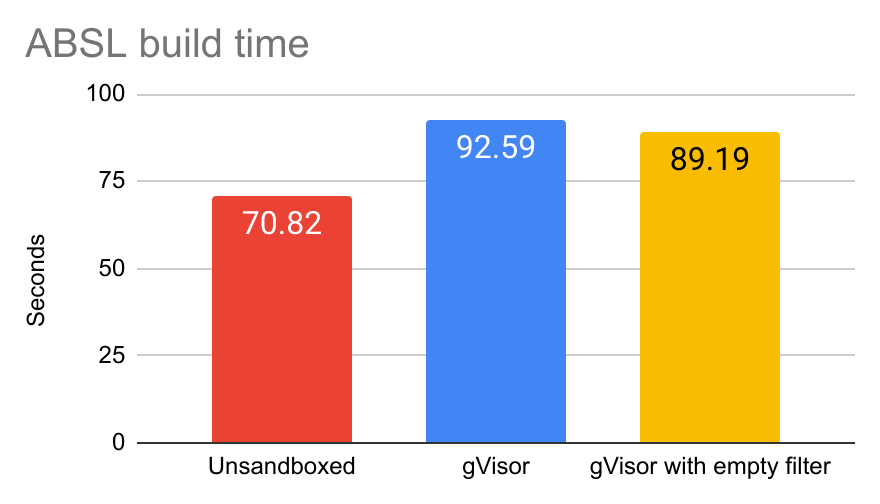

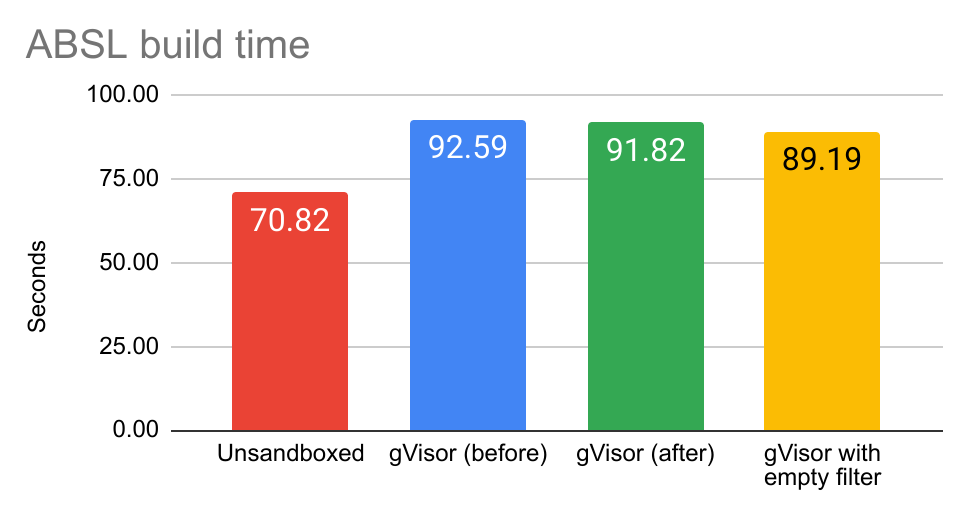

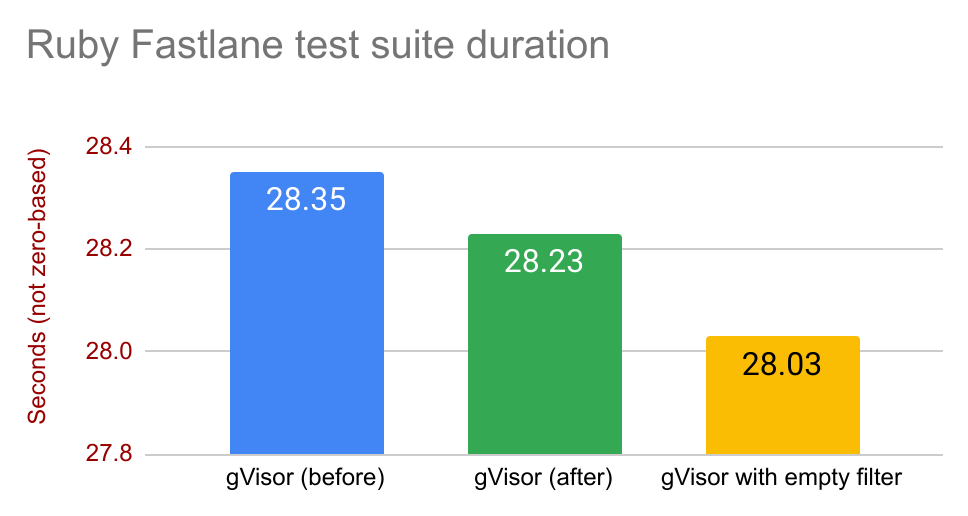

To visualize this, we can run a benchmark with the following variants:

- Unsandboxed: Unsandboxed performance without gVisor.

- gVisor: gVisor from before any of the performance improvements described later in this post.

- gVisor with empty filter: Same as gVisor, but with the

seccomp-bpffilter replaced with one that unconditionally approves every system call.

From these three variants, we can break down the gVisor overhead that comes from

gVisor itself vs the one that comes from seccomp-bpf filtering. The difference

between gVisor and unsandboxed represents the total gVisor performance

overhead, and the difference between gVisor and gVisor with empty filter

represents the performance overhead of gVisor’s seccomp-bpf filtering rules.

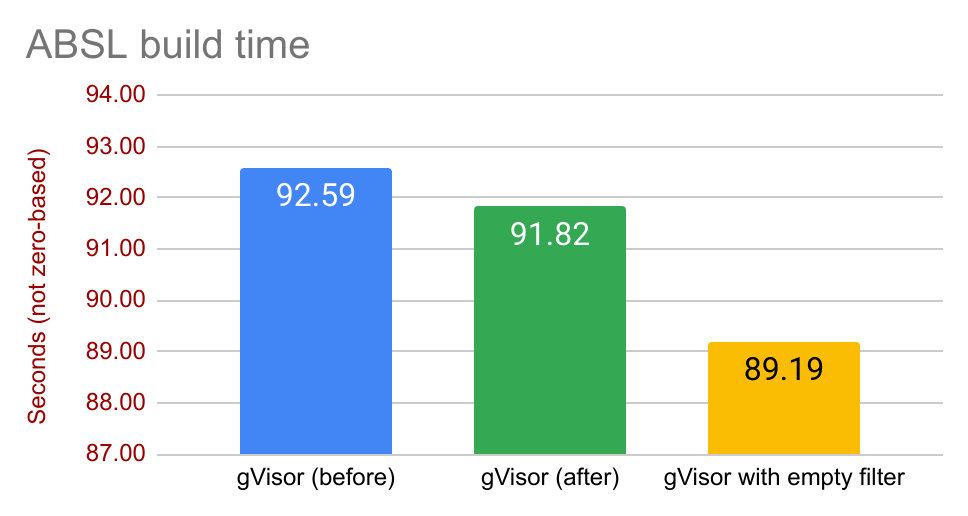

Let’s run these numbers for the ABSL build benchmark:

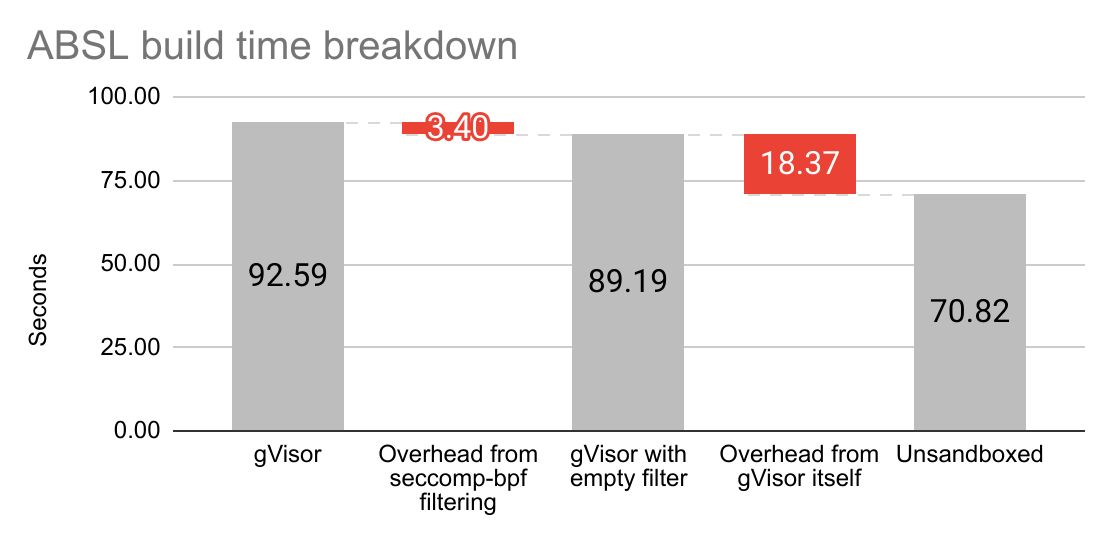

We can now use these numbers to give a rough breakdown of where the overhead is coming from:

The seccomp-bpf overhead is small in absolute terms. The numbers suggest that

the best that can be shaved off by optimizing seccomp-bpf filters is up to

3.4 seconds off from the total ABSL build time, which represents a reduction of

total runtime by ~3.6%. However, when looking at this amount relative to

gVisor’s overhead over unsandboxed time, this means that optimizing the

seccomp-bpf filters may remove up to ~15% of gVisor overhead, which is

significant. (Not all benchmarks have this behavior; some benchmarks show

smaller seccomp-bpf-related overhead. The overhead is also highly

platform-dependent.)

Of course, this level of performance is what was reached with empty

seccomp-bpf filtering rules, so we cannot hope to reach this level of

performance gains. However, it is still useful as an upper bound. Let’s see how

much of it we can recoup without compromising security.

A primer on BPF and seccomp-bpf

BPF, cBPF, eBPF, oh my!

BPF (Berkeley Packet Filter) is a virtual machine and eponymous machine

language. Its name comes from its original purpose: filtering packets in a

kernel network stack. However, its use has expanded to other domains of the

kernel where programmability is desirable. Syscall filtering in the context of

seccomp is one such area.

BPF itself comes in two dialects: “Classic BPF” (sometimes stylized as cBPF),

and the now-more-well-known “Extended BPF” (commonly known as eBPF).

eBPF is a superset of cBPF and is usable extensively throughout the kernel.

However, seccomp is not one such area. While

the topic has been heavily debated, the

status quo remains that seccomp filters may only use cBPF, so this post will

focus on cBPF alone.

So what is seccomp-bpf exactly?

seccomp-bpf is a part of the Linux kernel which allows a program to impose

syscall filters on itself. A seccomp-bpf filter is a cBPF program that is

given syscall data as input, and outputs an “action” (a 32-bit integer) to do as

a result of this system call: allow it, reject it, crash the program, trap

execution, etc. The kernel evaluates the cBPF program on every system call the

application makes. The “input” of this cBPF program is the byte layout of the

seccomp_data struct, which can be loaded into the registers of the cBPF

virtual machine for analysis.

Here’s what the seccomp_data struct looks like in

Linux’s include/uapi/linux/seccomp.h:

struct seccomp_data {

int nr; // 32 bits

__u32 arch; // 32 bits

__u64 instruction_pointer; // 64 bits

__u64 args[6]; // 64 bits × 6

}; // Total 512 bits

Sample seccomp-bpf filter

Here is an example seccomp-bpf filter, adapted from the

Linux kernel documentation1:

00: load32 4 // Load 32 bits at offsetof(struct seccomp_data, arch) (= 4)

// of the seccomp_data input struct into register A.

01: jeq 0xc000003e, 0, 11 // If A == AUDIT_ARCH_X86_64, jump by 0 instructions [to 02]

// else jump by 11 instructions [to 13].

02: load32 0 // Load 32 bits at offsetof(struct seccomp_data, nr) (= 0)

// of the seccomp_data input struct into register A.

03: jeq 15, 10, 0 // If A == __NR_rt_sigreturn, jump by 10 instructions [to 14]

// else jump by 0 instructions [to 04].

04: jeq 231, 9, 0 // If A == __NR_exit_group, jump by 9 instructions [to 14]

// else jump by 0 instructions [to 05].

05: jeq 60, 8, 0 // If A == __NR_exit, jump by 8 instructions [to 14]

// else jump by 0 instructions [to 06].

06: jeq 0, 7, 0 // Same thing for __NR_read.

07: jeq 1, 6, 0 // Same thing for __NR_write.

08: jeq 5, 5, 0 // Same thing for __NR_fstat.

09: jeq 9, 4, 0 // Same thing for __NR_mmap.

10: jeq 14, 3, 0 // Same thing for __NR_rt_sigprocmask.

11: jeq 13, 2, 0 // Same thing for __NR_rt_sigaction.

12: jeq 35, 1, 0 // If A == __NR_nanosleep, jump by 1 instruction [to 14]

// else jump by 0 instructions [to 13].

13: return 0 // Return SECCOMP_RET_KILL_THREAD

14: return 0x7fff0000 // Return SECCOMP_RET_ALLOW

This filter effectively allows only the following syscalls: rt_sigreturn,

exit_group, exit, read, write, fstat, mmap, rt_sigprocmask,

rt_sigaction, and nanosleep. All other syscalls result in the calling thread

being killed.

seccomp-bpf and cBPF limitations

cBPF is quite limited as a language. The following limitations all factor into the optimizations described in this blog post:

- The cBPF virtual machine only has 2 32-bit registers, and a tertiary

pseudo-register for a 32-bit immediate value. (Note that syscall arguments

evaluated in the context of

seccompare 64-bit values, so you can already foresee that this leads to complications.) seccomp-bpfprograms are limited to 4,096 instructions.- Jump instructions can only go forward (this ensures that programs must halt).

- Jump instructions may only jump by a fixed (“immediate”) number of instructions. (You cannot say: “jump by whatever this register says”.)

- Jump instructions come in two flavors:

- “Unconditional” jump instructions, which jump by a fixed number of instructions. This number must fit in 16 bits.

- “Conditional” jump instructions, which include a condition expression

and two jump targets:

- The number of instructions to jump by if the condition is true. This number must fit in 8 bits, so this cannot jump by more than 255 instructions.

- The number of instructions to jump by if the condition is false. This number must fit in 8 bits, so this cannot jump by more than 255 instructions.

seccomp-bpf caching in Linux

Since

Linux kernel version 5.11,

when a program uploads a seccomp-bpf filter into the kernel,

Linux runs a BPF emulator

that looks for system call numbers where the BPF program doesn’t do any fancy

operations nor load any bits from the instruction_pointer or args fields of

the seccomp_data input struct, and still returns “allow”. When this is the

case, Linux will cache this information in a per-syscall-number bitfield.

Later, when a cacheable syscall number is executed, the BPF program is not evaluated at all; since the kernel knows that the program is deterministic and doesn’t depend on the syscall arguments, it can safely allow the syscall without actually running the BPF program.

This post uses the term “cacheable” to refer to syscalls that match this criteria.

How gVisor builds its seccomp-bpf filter

gVisor imposes a seccomp-bpf filter on itself as part of Sentry start-up. This

process works as follows:

- gVisor gathers bits of configuration that are relevant to the construction

of its

seccomp-bpffilter. This includes which platform is in use, whether certain features that require looser filtering are enabled (e.g. host networking, profiling, GPU proxying, etc.), and certain file descriptors (FDs) which may be checked against syscall arguments that pass in FDs. -

gVisor generates a sequence of rulesets from this configuration. A ruleset is a mapping from syscall number to a predicate that must be true for this system call, along with an “action” (return code) that is taken should this predicate be satisfied. For ease of human understanding, the predicate is often written as a disjunctive rule, for which each sub-rule is a conjunctive rule that verifies each syscall argument. In other words,

(fA(args[0]) && fB(args[1]) && ...) || (fC(args[0]) && fD(args[1]) && ...) || .... This is represented in gVisor code as follows:Or{ // Disjunction rule PerArg{ // Conjunction rule over each syscall argument fA, // Predicate for `seccomp_data.args[0]` fB, // Predicate for `seccomp_data.args[1]` // ... More predicates can go here (up to 6 arguments per syscall) }, PerArg{ // Conjunction rule over each syscall argument fC, // Predicate for `seccomp_data.args[0]` fD, // Predicate for `seccomp_data.args[1]` // ... More predicates can go here (up to 6 arguments per syscall) }, } -

gVisor performs several optimizations on this data structure.

-

gVisor then renders this list of rulesets into a linear program that looks close to the final machine language, other than jump offsets which are initially represented as symbolic named labels during the rendering process.

-

gVisor then resolves all the labels to their actual instruction index, and computes the actual jump targets of all jump instructions to obtain valid cBPF machine code.

-

gVisor runs further optimizations on this cBPF bytecode.

- Finally, the cBPF bytecode is uploaded into the host kernel and the

seccomp-bpffilter becomes effective.

Optimizing the seccomp-bpf filter to be more efficient allows the program to

be more compact (i.e. it’s possible to pack more complex filters in the 4,096

instruction limit), and to run faster. While seccomp-bpf evaluation is

measured in nanoseconds, the impact of any optimization is magnified here,

because host syscalls are an important part of the synchronous “syscall hot

path” that must execute as part of handling certain performance-sensitive

syscall from the sandboxed application. The relationship is not 1-to-1: a single

application syscall may result in several host syscalls, especially due to

futex(2) which the Sentry calls many times to synchronize its own operations.

Therefore, shaving a nanosecond here and there results in several shaved

nanoseconds in the syscall hot path.

Structural optimizations

The first optimization done for gVisor’s seccomp-bpf was to turn its linear

search over syscall numbers into a

binary search tree. This

turns the search for syscall numbers from O(n) to O(log n) instructions.

This is a very common seccomp-bpf optimization technique which is replicated

in other projects such as

libseccomp and Chromium.

To do this, a cBPF program basically loads the 32-bit nr (syscall number)

field of the seccomp_data struct, and does a binary tree traversal of the

syscall number space.

When it finds a match, it jumps to a set of instructions that check that

syscall’s arguments for validity, and then returns allow/reject.

But why stop here? Let’s go further.

The problem with the binary search tree approach is that it treats all syscall numbers equally. This is a problem for three reasons:

- It does not matter to have good performance for disallowed syscalls, because such syscalls should never happen during normal program execution.

- It does not matter to have good performance for syscalls which can be cached by the kernel, because the BPF program will only have to run once for these system calls.

- For the system calls which are allowed but are not cacheable by the kernel, there is a Pareto distribution of their relative frequency. To exploit this we should evaluate the most-often used syscalls faster than the least-often used ones. The binary tree structure does not exploit this distribution, and instead treats all syscalls equally.

So gVisor splits syscall numbers into four sets:

- 🅰: Non-cacheable 🅰llowed, called very frequently.

- 🅱: Non-cacheable allowed, called once in a 🅱lue moon.

- 🅲: 🅲acheable allowed (whether called frequently or not).

- 🅳: 🅳isallowed (which, by definition, is neither cacheable nor expected to ever be called).

Then, the cBPF program is structured in the following layout:

- Linear search over allowed frequently-called non-cacheable syscalls (🅰).

These syscalls are ordered in most-frequently-called first (e.g.

futex(2)is the first one as it is by far the most-frequently-called system call). - Binary search over allowed infrequently-called non-cacheable syscalls (🅱).

- Binary search over allowed cacheable syscalls (🅲).

- Reject anything else (🅳).

This structure takes full advantage of the kernel caching functionality, and of the Pareto distribution of syscalls.

Binary search tree optimizations

Beyond classifying syscalls to see which binary search tree they should be a

part of, gVisor also optimizes the binary search process itself.

Each syscall number is a node in the tree. When traversing the tree, there are three options at each point:

- The syscall number is an exact match

- The syscall number is lower than the node’s value

- The syscall number is higher than the node’s value

In order to render the BST as cBPF bytecode, gVisor used to render the following (in pseudocode):

if syscall number == current node value

jump @rules_for_this_syscall

if syscall number < current node value

jump @left_node

jump @right_node

@rules_for_this_syscall:

// Render bytecode for this syscall's filters here...

@left_node:

// Recursively render the bytecode for the left node value here...

@right_node:

// Recursively render the bytecode for the right node value here...

Keep in mind the cBPF limitations here. Because conditional

jumps are limited to 255 instructions, the jump to @left_node can be further

than 255 instructions away (especially for syscalls with complex filtering rules

like ioctl(2)). The jump

to @right_node is almost certainly more than 255 instructions away. This means

in actual cBPF bytecode, we would often need to use conditional jumps followed

by unconditional jumps in order to jump so far forward. Meanwhile, the jump to

@rules_for_this_syscall would be a very short hop away, but this locality

would only be taken advantage of for a single node of the entire tree for each

traversal.

Consider this structure instead:

// Traversal code:

if syscall number < current node value

jump @left_node

if syscall_number > current node value

jump @right_node

jump @rules_for_this_syscall

@left_node:

// Recursively render only the traversal code for the left node here

@right_node:

// Recursively render only the traversal code for the right node here

// Filtering code:

@rules_for_this_syscall:

// Render bytecode for this syscall's filters here

// Recursively render only the filtering code for the left node here

// Recursively render only the filtering code for the right node here

This effectively separates the per-syscall rules from the traversal of the BST. This ensures that the traversal can be done entirely using conditional jumps, and that for any given execution of the cBPF program, there will be at most one unconditional jump to the syscall-specific rules.

This structure is further improvable by taking advantage of the fact that syscall numbers are a dense space, and so are syscall filter rules. This means we can often avoid needless comparisons. For example, given the following tree:

22

/ \

9 24

/ / \

8 23 50

Notice that the tree contains 22, 23, and 24. This means that if we get to

node 23, we do not need to check for syscall number equality, because we’ve

already established from the traversal that the syscall number must be 23.

cBPF bytecode optimizations

gVisor now implements a bytecode-level cBPF optimizer running a few lossless optimizations. These optimizations are run repeatedly until the bytecode no longer changes. This is because each type of optimization tends to feed on the fruits of the others, as we’ll see below.

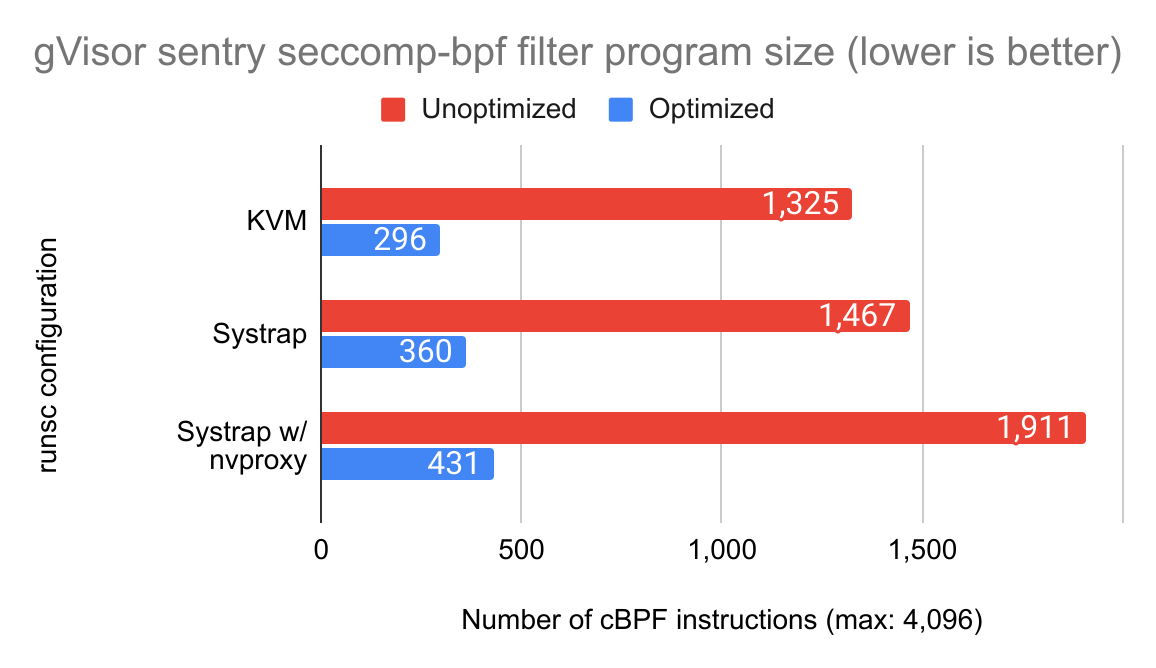

gVisor’s seccomp-bpf program size is reduced by over a factor of 4 using the

optimizations below.

Optimizing cBPF jumps

The limitations of cBPF jump instructions described earlier

means that typical BPF bytecode rendering code will usually favor unconditional

jumps even when they are not necessary. However, they can be optimized after the

fact.

Typical BPF bytecode rendering code for a simple condition is usually rendered as follows:

jif <condition>, 0, 1 // If <condition> is true, continue,

// otherwise skip over 1 instruction.

jmp @condition_was_true // Unconditional jump to label @condition_was_true.

jmp @condition_was_false // Unconditional jump to label @condition_was_false.

… or as follows:

jif <condition>, 1, 0 // If <condition> is true, jump by 1 instruction,

// otherwise continue.

jmp @condition_was_false // Unconditional jump to label @condition_was_false.

// Flow through here if the condition was true.

… In other words, the generated code always uses unconditional jumps, and

conditional jump offsets are always either 0 or 1 instructions forward. This is

because conditional jumps are limited to 8 bits (255 instructions), and it is

not always possible at BPF bytecode rendering time to know ahead of time that

the jump targets (@condition_was_true, @condition_was_false) will resolve to

an instruction that is close enough ahead that the offset would fit in 8 bits.

The safe thing to do is to always use an unconditional jump. Since unconditional

jump targets have 16 bits to play with, and seccomp-bpf programs are limited

to 4,096 instructions, it is always possible to encode a jump using an

unconditional jump instruction.

But of course, the jump target often does fit in 8 bits. So gVisor looks over the bytecode for optimization opportunities:

- Conditional jumps that jump to unconditional jumps are rewritten to their final destination, so long as this fits within the 255-instruction conditional jump limit.

- Unconditional jumps that jump to other unconditional jumps are rewritten to their final destination.

- Conditional jumps where both branches jump to the same instruction are replaced by an unconditional jump to that instruction.

- Unconditional jumps with a zero-instruction jump target are removed.

The aim of these optimizations is to clean up after needless indirection that is a byproduct of cBPF bytecode rendering code. Once they all have run, all jumps are as tight as they can be.

Removing dead code

Because cBPF is a very restricted language, it is possible to determine with

certainty that some instructions can never be reached.

In cBPF, each instruction either:

- Flows forward (e.g.

loadoperations, math operations). - Jumps by a fixed (immediate) number of instructions.

- Stops the execution immediately (

returninstructions).

Therefore, gVisor runs a simple program traversal algorithm. It creates a bitfield with one bit per instruction, then traverses the program and all its possible branches. Then, all instructions that were never traversed are removed from the program, and all jump targets are updated to account for these removals.

In turn, this makes the program shorter, which makes more jump optimizations possible.

Removing redundant load instructions

cBPF programs filter system calls by inspecting their arguments. To do these

comparisons, this data must first be loaded into the cBPF VM registers. These

load operations can be optimized.

load instructionscBPF’s conditional operations (e.g. “is equal to”, “is greater than”, etc.)

operate on a single 32-bit register called “A”. As such, a seccomp-bpf program

typically consists of many load operations (load32) that loads a 32-bit value

from a given offset of the seccomp_data struct into register A, then performs

a comparative operation on it to see if it matches the filter.

00: load32 <offset>

01: jif <condition1>, @condition1_was_true, @condition1_was_false

02: load32 <offset>

03: jif <condition2>, @condition2_was_true, @condition2_was_false

// ...

But when a syscall rule is of the form “this syscall argument must be one of the following values”, we don’t need to reload the same value (from the same offset) multiple times. So gVisor looks for redundant loads like this, and removes them.

00: load32 <offset>

01: jif <condition1>, @condition1_was_true, @condition1_was_false

02: jif <condition2>, @condition2_was_true, @condition2_was_false

// ...

Note that syscall arguments are 64-bit values, whereas the A register is

only 32-bits wide. Therefore, asserting that a syscall argument matches a

predicate usually involves at least 2 load32 operations on different offsets,

thereby making this optimization useless for the “this syscall argument must be

one of the following values” case. We’ll get back to that.

Minimizing the number of return instructions

A typical syscall filter program consists of many predicates which return either

“allowed” or “rejected”. These are encoded in the bytecode as either return

instructions, or jumps to return instructions. These instructions can show up

dozens or hundreds of times in the cBPF bytecode in quick succession, presenting

an optimization opportunity.

return instructionsreturn

instructions, or jumps to return instructions. These instructions can show up

dozens or hundreds of times in the cBPF bytecode in quick succession, presenting

an optimization opportunity.Since two return instructions with the same immediate return code are exactly

equivalent to one another, it is possible to rewrite jumps to all return

instructions that return “allowed” to go to a single return instruction that

returns this code, and similar for “rejected”, so long as the jump offsets fit

within the limits of conditional jumps (255 instructions). In turn, this makes

the program shorter, and therefore makes more jump optimizations possible.

To implement this optimization, gVisor first replaces all unconditional jump

instructions that go to return statements with a copy of that return

statement. This removes needless indirection.

Original bytecode New bytecode

00: jeq 0, 0, 1 00: jeq 0, 0, 1

01: jmp @good --> 01: return allowed

02: jmp @bad --> 02: return rejected

... ...

10: jge 0, 0, 1 10: jge 0, 0, 1

11: jmp @good --> 11: return allowed

12: jmp @bad --> 12: return rejected

... ...

100 [@good]: return allowed 100 [@good]: return allowed

101 [@bad]: return rejected 101 [@bad]: return rejected

gVisor then searches for return statements which can be entirely removed by

seeing if it is possible to rewrite the rest of the program to jump or flow

through to an equivalent return statement (without making the program longer

in the process). In the above example:

Original bytecode New bytecode

00: jeq 0, 0, 1 --> 00: jeq 0, 99, 100 // Targets updated

01: return allowed 01: return allowed // Now dead code

02: return reject 02: return rejected // Now dead code

... ...

10: jge 0, 0, 1 --> 10: jge 0, 89, 90 // Targets updated

11: jmp @good 11: return allowed // Now dead code

12: jmp @bad 12: return rejected // Now dead code

... ...

100 [@good]: return allowed 100 [@good]: return allowed

101 [@bad]: return rejected 101 [@bad]: return rejected

Finally, the dead code removal pass cleans up the dead return statements and

the program becomes shorter.

Original bytecode New bytecode

00: jeq 0, 99, 100 --> 00: jeq 0, 95, 96 // Targets updated

01: return allowed --> /* Removed */

02: return reject --> /* Removed */

... ...

10: jge 0, 89, 90 --> 08: jge 0, 87, 88 // Targets updated

11: return allowed --> /* Removed */

12: return rejected --> /* Removed */

... ...

100 [@good]: return allowed 96 [@good]: return allowed

101 [@bad]: return rejected 97 [@bad]: return rejected

While this search is expensive to perform, in a program full of predicates —

which is exactly what seccomp-bpf programs are — this approach massively

reduces program size.

Ruleset optimizations

Bytecode-level optimizations are cool, but why stop here? gVisor now also

performs

seccomp ruleset optimizations.

In gVisor, a seccomp RuleSet is a mapping from syscall number to a logical

expression named SyscallRule, along with a seccomp-bpf action (e.g. “allow”)

if a syscall with a given number matches its SyscallRule.

Basic ruleset simplifications

A SyscallRule is a predicate over the data contained in the seccomp_data

struct (beyond its nr). A trivial implementation is MatchAll, which simply

matches any seccomp_data. Other implementations include Or and And (which

do what they sound like), and PerArg which applies predicates to each specific

argument of a seccomp_data, and forms the meat of actual syscall filtering

rules. Some basic simplifications are already possible with these building

blocks.

SyscallRule is a predicate over the data contained in the seccomp_data

struct (beyond its nr). A trivial implementation is MatchAll, which simply

matches any seccomp_data. Other implementations include Or and And (which

do what they sound like), and PerArg which applies predicates to each specific

argument of a seccomp_data, and forms the meat of actual syscall filtering

rules. Some basic simplifications are already possible with these building

blocks.gVisor implements the following basic optimizers, which look like they may be useless on their own but end up simplifying the logic of the more complex optimizer described in other sections quite a bit:

OrandAndrules with a single predicate within them are replaced with just that predicate.- Duplicate predicates within

OrandAndrules are removed. Orrules withinOrrules are flattened.Andrules withinAndrules are flattened.- An

Orrule which contains aMatchAllpredicate is replaced withMatchAll. MatchAllpredicates withinAndrules are removed.PerArgrules withMatchAllpredicates for each argument are replaced with a rule that matches anything.

As with the bytecode-level optimizations, gVisor runs these in a loop until the structure of the rules no longer change. With the basic optimizations above, this silly-looking rule:

Or{

Or{

And{

MatchAll,

PerArg{AnyValue, EqualTo(2), AnyValue},

},

MatchAll,

},

PerArg{AnyValue, EqualTo(2), AnyValue},

PerArg{AnyValue, EqualTo(2), AnyValue},

}

… is simplified down to just PerArg{AnyValue, EqualTo(2), AnyValue}.

Extracting repeated argument matchers

This is the main optimization that gVisor performs on rulesets. gVisor looks for

common argument matchers that are repeated across all combinations of other

argument matchers in branches of an Or rule. It removes them from these

PerArg rules, and And the overall syscall rule with a single instance of

that argument matcher. Sound complicated? Let’s look at an example.

Or rule. It removes them from these

PerArg rules, and And the overall syscall rule with a single instance of

that argument matcher. Sound complicated? Let’s look at an example.In the

gVisor Sentry seccomp-bpf configuration,

these are the rules for the

fcntl(2) system call:

rules = ...(map[uintptr]SyscallRule{

SYS_FCNTL: Or{

PerArg{

NonNegativeFD,

EqualTo(F_GETFL),

},

PerArg{

NonNegativeFD,

EqualTo(F_SETFL),

},

PerArg{

NonNegativeFD,

EqualTo(F_GETFD),

},

},

})

… This means that for the fcntl(2) system call, seccomp_data.args[0] may

be any non-negative number, seccomp_data.args[1] may be either F_GETFL,

F_SETFL, or F_GETFD, and all other seccomp_data fields may be any value.

If rendered naively in BPF, this would iterate over each branch of the Or

expression, and re-check the NonNegativeFD each time. Clearly wasteful.

Conceptually, the ideal expression is something like this:

rules = ...(map[uintptr]SyscallRule{

SYS_FCNTL: PerArg{

NonNegativeFD,

AnyOf(F_GETFL, F_SETFL, F_GETFD),

},

})

… But going through all the syscall rules to look for this pattern would be

quite tedious, and some of them are actually Or‘d from multiple

map[uintptr]SyscallRule in different files (e.g. platform-dependent syscalls),

so they cannot be all specified in a single location with a single predicate on

seccomp_data.args[1]. So gVisor needs to detect this programmatically at

optimization time.

Conceptually, gVisor goes from:

Or{

PerArg{A1, B1, C1, D},

PerArg{A2, B1, C1, D},

PerArg{A1, B2, C2, D},

PerArg{A2, B2, C2, D},

PerArg{A1, B3, C3, D},

PerArg{A2, B3, C3, D},

}

… to (after one pass):

And{

Or{

PerArg{A1, AnyValue, AnyValue, AnyValue},

PerArg{A2, AnyValue, AnyValue, AnyValue},

PerArg{A1, AnyValue, AnyValue, AnyValue},

PerArg{A2, AnyValue, AnyValue, AnyValue},

PerArg{A1, AnyValue, AnyValue, AnyValue},

PerArg{A2, AnyValue, AnyValue, AnyValue},

},

Or{

PerArg{AnyValue, B1, C1, D},

PerArg{AnyValue, B1, C1, D},

PerArg{AnyValue, B2, C2, D},

PerArg{AnyValue, B2, C2, D},

PerArg{AnyValue, B3, C3, D},

PerArg{AnyValue, B3, C3, D},

},

}

Then the basic optimizers will kick in and

detect duplicate PerArg rules in Or expressions, and delete them:

And{

Or{

PerArg{A1, AnyValue, AnyValue, AnyValue},

PerArg{A2, AnyValue, AnyValue, AnyValue},

},

Or{

PerArg{AnyValue, B1, C1, D},

PerArg{AnyValue, B2, C2, D},

PerArg{AnyValue, B3, C3, D},

},

}

… Then, on the next pass, the second inner Or rule gets recursively

optimized:

And{

Or{

PerArg{A1, AnyValue, AnyValue, AnyValue},

PerArg{A2, AnyValue, AnyValue, AnyValue},

},

And{

Or{

PerArg{AnyValue, AnyValue, AnyValue, D},

PerArg{AnyValue, AnyValue, AnyValue, D},

PerArg{AnyValue, AnyValue, AnyValue, D},

},

Or{

PerArg{AnyValue, B1, C1, AnyValue},

PerArg{AnyValue, B2, C2, AnyValue},

PerArg{AnyValue, B3, C3, AnyValue},

},

},

}

… which, after other basic optimizers clean this all up, finally becomes:

And{

Or{

PerArg{A1, AnyValue, AnyValue, AnyValue},

PerArg{A2, AnyValue, AnyValue, AnyValue},

},

PerArg{AnyValue, AnyValue, AnyValue, D},

Or{

PerArg{AnyValue, B1, C1, AnyValue},

PerArg{AnyValue, B2, C2, AnyValue},

PerArg{AnyValue, B3, C3, AnyValue},

},

}

This has turned what would be 24 comparisons into just 9:

seccomp_data[0]must either match predicateA1orA2.seccomp_data[3]must match predicateD.- At least one of the following must be true:

seccomp_data[1]must match predicateB1andseccomp_data[2]must match predicateC1.seccomp_data[1]must match predicateB2andseccomp_data[2]must match predicateC2.seccomp_data[1]must match predicateB3andseccomp_data[2]must match predicateC3.

To go back to our fcntl(2) example, the rules would therefore be rewritten to:

rules = ...(map[uintptr]SyscallRule{

SYS_FCNTL: And{

// Check for args[0] exclusively:

PerArg{NonNegativeFD, AnyValue},

// Check for args[1] exclusively:

Or{

PerArg{AnyValue, EqualTo(F_GETFL)},

PerArg{AnyValue, EqualTo(F_SETFL)},

PerArg{AnyValue, EqualTo(F_GETFD)},

},

},

})

… thus we’ve turned 6 comparisons into 4. But we can do better still!

Extracting repeated 32-bit match logic from 64-bit argument matchers

We can apply the same optimization, but down to the 32-bit matching logic that

underlies the 64-bit syscall argument matching predicates.

As you may recall, cBPF instructions are limited to 32-bit math. This means that when rendered, each of these argument comparisons are actually 2 operations each: one for the first 32-bit half of the argument, and one for the second 32-bit half of the argument.

Let’s look at the F_GETFL, F_SETFL, and F_GETFD constants:

F_GETFL = 0x3

F_SETFL = 0x4

F_GETFD = 0x1

The cBPF bytecode for checking the arguments of this syscall may therefore look something like this:

// Check for `seccomp_data.args[0]`:

00: load32 16 // Load the first 32 bits of

// `seccomp_data.args[0]` into register A.

01: jeq 0, 0, @bad // If A == 0, continue, otherwise jump to @bad.

02: load32 20 // Load the second 32 bits of

// `seccomp_data.args[0]` into register A.

03: jset 0x80000000, @bad, 0 // If A & 0x80000000 != 0, jump to @bad,

// otherwise continue.

// Check for `seccomp_data.args[1]`:

04: load32 24 // Load the first 32 bits of

// `seccomp_data.args[1]` into register A.

05: jeq 0, 0, @next1 // If A == 0, continue, otherwise jump to @next1.

06: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

07: jeq 0x3, @good, @next1 // If A == 0x3, jump to @good,

// otherwise jump to @next1.

@next1:

08: load32 24 // Load the first 32 bits of

// `seccomp_data.args[1]` into register A.

09: jeq 0, 0, @next2 // If A == 0, continue, otherwise jump to @next2.

10: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

11: jeq 0x4, @good, @next2 // If A == 0x3, jump to @good,

// otherwise jump to @next2.

@next2:

12: load32 24 // Load the first 32 bits of

// `seccomp_data.args[1]` into register A.

13: jeq 0, 0, @bad // If A == 0, continue, otherwise jump to @bad.

14: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

15: jeq 0x1, @good, @bad // If A == 0x1, jump to @good,

// otherwise jump to @bad.

// Good/bad jump targets for the checks above to jump to:

@good:

16: return ALLOW

@bad:

17: return REJECT

Clearly this could be better. The first 32 bits must be zero in all possible

cases. So the syscall argument value-matching primitives (e.g. EqualTo) may be

split into 2 32-bit value matchers:

rules = ...(map[uintptr]SyscallRule{

SYS_FCNTL: And{

PerArg{NonNegativeFD, AnyValue},

Or{

PerArg{

AnyValue,

splitMatcher{

high32bits: EqualTo32Bits(

F_GETFL & 0xffffffff00000000 /* = 0 */),

low32bits: EqualTo32Bits(

F_GETFL & 0x00000000ffffffff /* = 0x3 */),

},

},

PerArg{

AnyValue,

splitMatcher{

high32bits: EqualTo32Bits(

F_SETFL & 0xffffffff00000000 /* = 0 */),

low32bits: EqualTo32Bits(

F_SETFL & 0x00000000ffffffff /* = 0x4 */),

},

},

PerArg{

AnyValue,

splitMatcher{

high32bits: EqualTo32Bits(

F_GETFD & 0xffffffff00000000 /* = 0 */),

low32bits: EqualTo32Bits(

F_GETFD & 0x00000000ffffffff /* = 0x1 */),

},

},

},

},

})

gVisor then applies the same optimization as earlier, but this time going into

each 32-bit half of each argument. This means it can extract the

EqualTo32Bits(0) matcher from the high32bits part of each splitMatcher and

move it up to the And expression like so:

rules = ...(map[uintptr]SyscallRule{

SYS_FCNTL: And{

PerArg{NonNegativeFD, AnyValue},

PerArg{

AnyValue,

splitMatcher{

high32bits: EqualTo32Bits(0),

low32bits: Any32BitsValue,

},

},

Or{

PerArg{

AnyValue,

splitMatcher{

high32bits: Any32BitsValue,

low32bits: EqualTo32Bits(

F_GETFL & 0x00000000ffffffff /* = 0x3 */),

},

},

PerArg{

AnyValue,

splitMatcher{

high32bits: Any32BitsValue,

low32bits: EqualTo32Bits(

F_SETFL & 0x00000000ffffffff /* = 0x4 */),

},

},

PerArg{

AnyValue,

splitMatcher{

high32bits: Any32BitsValue,

low32bits: EqualTo32Bits(

F_GETFD & 0x00000000ffffffff /* = 0x1 */),

},

},

},

},

})

This looks bigger as a tree, but keep in mind that the AnyValue and

Any32BitsValue matchers do not produce any bytecode. So now let’s render that

tree to bytecode:

// Check for `seccomp_data.args[0]`:

00: load32 16 // Load the first 32 bits of

// `seccomp_data.args[0]` into register A.

01: jeq 0, 0, @bad // If A == 0, continue, otherwise jump to @bad.

02: load32 20 // Load the second 32 bits of

// `seccomp_data.args[0]` into register A.

03: jset 0x80000000, @bad, 0 // If A & 0x80000000 != 0, jump to @bad,

// otherwise continue.

// Check for `seccomp_data.args[1]`:

04: load32 24 // Load the first 32 bits of

// `seccomp_data.args[1]` into register A.

05: jeq 0, 0, @bad // If A == 0, continue, otherwise jump to @bad.

06: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

07: jeq 0x3, @good, @next1 // If A == 0x3, jump to @good,

// otherwise jump to @next1.

@next1:

08: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

09: jeq 0x4, @good, @next2 // If A == 0x3, jump to @good,

// otherwise jump to @next2.

@next2:

10: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

11: jeq 0x1, @good, @bad // If A == 0x1, jump to @good,

// otherwise jump to @bad.

// Good/bad jump targets for the checks above to jump to:

@good:

12: return ALLOW

@bad:

13: return REJECT

This is where the bytecode-level optimization to remove redundant loads

described earlier finally becomes relevant. We don’t need to

load the second 32 bits of seccomp_data.args[1] multiple times in a row:

// Check for `seccomp_data.args[0]`:

00: load32 16 // Load the first 32 bits of

// `seccomp_data.args[0]` into register A.

01: jeq 0, 0, @bad // If A == 0, continue, otherwise jump to @bad.

02: load32 20 // Load the second 32 bits of

// `seccomp_data.args[0]` into register A.

03: jset 0x80000000, @bad, 0 // If A & 0x80000000 != 0, jump to @bad,

// otherwise continue.

// Check for `seccomp_data.args[1]`:

04: load32 24 // Load the first 32 bits of

// `seccomp_data.args[1]` into register A.

05: jeq 0, 0, @bad // If A == 0, continue, otherwise jump to @bad.

06: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

07: jeq 0x3, @good, @next1 // If A == 0x3, jump to @good,

// otherwise jump to @next1.

@next1:

08: jeq 0x4, @good, @next2 // If A == 0x3, jump to @good,

// otherwise jump to @next2.

@next2:

09: jeq 0x1, @good, @bad // If A == 0x1, jump to @good,

// otherwise jump to @bad.

// Good/bad jump targets for the checks above to jump to:

@good:

10: return ALLOW

@bad:

11: return REJECT

Of course, in practice the @good/@bad jump targets would also be unified

with rules from other system call filters in order to cut down on those too. And

by having reduced the number of instructions in each individual filtering rule,

the jumps to these targets can be deduplicated against that many more rules.

This example demonstrates how optimizations build on top of each other, making each optimization more likely to make other optimizations useful in turn.

Other optimizations

Beyond these, gVisor also has the following minor optimizations.

Making futex(2) rules faster

futex(2) is by far the

most-often-called system call that gVisor calls as part of its operation. It is

used for synchronization, so it needs to be very efficient.

futex(2) rules fasterfutex(2) is by far the

most-often-called system call that gVisor calls as part of its operation. It is

used for synchronization, so it needs to be very efficient.Its rules used to look like this:

SYS_FUTEX: Or{

PerArg{

AnyValue,

EqualTo(FUTEX_WAIT | FUTEX_PRIVATE_FLAG),

},

PerArg{

AnyValue,

EqualTo(FUTEX_WAKE | FUTEX_PRIVATE_FLAG),

},

PerArg{

AnyValue,

EqualTo(FUTEX_WAIT),

},

PerArg{

AnyValue,

EqualTo(FUTEX_WAKE),

},

},

Essentially a 4-way Or between 4 different values allowed for

seccomp_data.args[1]. This is all well and good, and the above optimizations

already optimize this down to the minimum amount of jeq comparison operations.

But looking at the actual bit values of the FUTEX_* constants above:

FUTEX_WAIT = 0x00

FUTEX_WAKE = 0x01

FUTEX_PRIVATE_FLAG = 0x80

… We can see that this is equivalent to checking that no bits other than

0x01 and 0x80 may be set. Turns out that cBPF has an instruction for that.

This is now optimized down to two comparison operations:

01: load32 24 // Load the first 32 bits of

// `seccomp_data.args[1]` into register A.

02: jeq 0, 0, @bad // If A == 0, continue,

// otherwise jump to @bad.

03: load32 28 // Load the second 32 bits of

// `seccomp_data.args[1]` into register A.

04: jset 0xffffff7e, @bad, @good // If A & ^(0x01 | 0x80) != 0, jump to @bad,

// otherwise jump to @good.

Optimizing non-negative FD checks

A lot of syscall arguments are file descriptors (FD numbers), which we need to

filter efficiently.

An FD is a 32-bit positive integer, but is passed as a 64-bit value as all syscall arguments are. Instead of doing a “less than” operation, we can simply turn it into a bitwise check. We simply check that the first half of the 64-bit value is zero, and that the 31st bit of the second half of the 64-bit value is not set.

Enforcing consistency of argument-wise matchers

When one syscall argument is checked consistently across all branches of an

Or, enforcing that this is the case ensures that the

optimization for such matchers remains effective.

Or, enforcing that this is the case ensures that the

optimization for such matchers remains effective.The ioctl(2) system call takes an FD as one of its arguments. Since it is a

“grab bag” of a system call, gVisor’s rules for ioctl(2) were similarly spread

across many files and rules, and not all of them checked that the FD argument

was non-negative; some of them simply accepted any value for the FD argument.

Before this optimization work, this meant that the BPF program did less work for

the rules which didn’t check the value of the FD argument. However, now that

gVisor optimizes repeated argument-wise matchers, it is

now actually cheaper if all ioctl(2) rules verify the value of the FD

argument consistently, as that argument check can be performed exactly once for

all possible branches of the ioctl(2) rules. So now gVisor has a test that

verifies that this is the case. This is a good example that shows that

optimization work can lead to improved security due to the efficiency gains

that comes from applying security checks consistently.

secbench: Benchmarking seccomp-bpf programs

To measure the effectiveness of the above improvements, measuring gVisor performance itself would be very difficult, because each improvement is a rather tiny part of the syscall hot path. At the scale of each of these optimizations, we need to zoom in a bit more.

So now gVisor has

tooling for benchmarking seccomp-bpf programs.

It works by taking a

cBPF program along with several possible syscalls

to try with it. It runs a subprocess that installs this program as seccomp-bpf

filter for itself, replacing all actions (other than “approve syscall”) with

“return error” in order to avoid crashing. Then it measures the latency of each

syscall. This is then measured against the latency of the very same syscalls in

a subprocess that has an empty seccomp-bpf (i.e. the only instruction within

it is return ALLOW).

Let’s measure the effect of the above improvements on a gVisor-like workload.

Modeling gVisor seccomp-bpf behavior for benchmarking

This can be done by running gVisor under ptrace to see what system calls the

gVisor process is doing.

seccomp-bpf behavior for benchmarkingptrace to see what system calls the

gVisor process is doing.Note that ptrace here refers to the mechanism by which we can inspect the

system call that the gVisor Sentry is making. This is distinct from the system

calls the sandboxed application is doing. It has also nothing to do with

gVisor’s former “ptrace” platform.

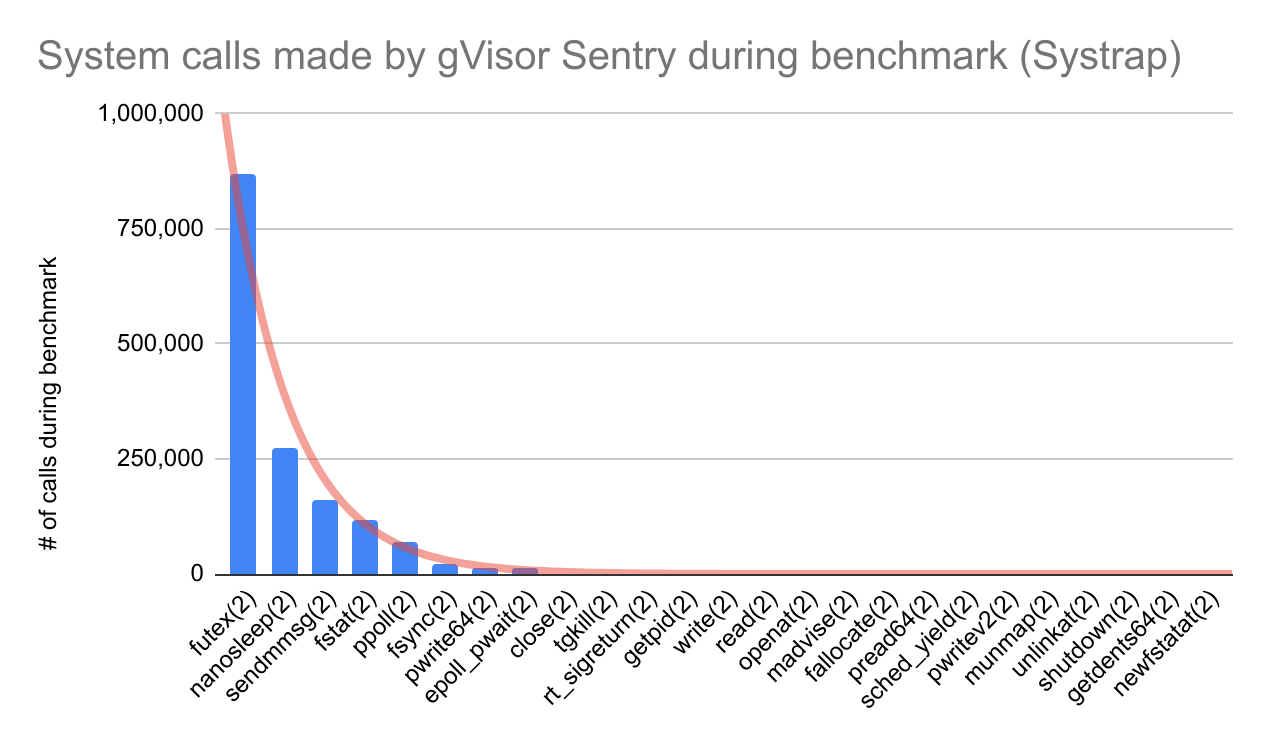

For example, after running a Postgres benchmark inside gVisor with Systrap, the

ptrace tool generated the following summary table:

% time seconds usecs/call calls errors syscall

------ ----------- ----------- --------- --------- ----------------

62.10 431.799048 496 870063 46227 futex

4.23 29.399526 106 275649 38 nanosleep

0.87 6.032292 37 160201 sendmmsg

0.28 1.939492 16 115769 fstat

27.96 194.415343 2787 69749 137 ppoll

1.05 7.298717 315 23131 fsync

0.06 0.446930 31 14096 pwrite64

3.37 23.398106 1907 12266 9 epoll_pwait

0.00 0.019711 9 1991 6 close

0.02 0.116739 82 1414 tgkill

0.01 0.068481 48 1414 201 rt_sigreturn

0.02 0.147048 104 1413 getpid

0.01 0.045338 41 1080 write

0.01 0.039876 37 1056 read

0.00 0.015637 18 836 24 openat

0.01 0.066699 81 814 madvise

0.00 0.029757 111 267 fallocate

0.00 0.006619 15 420 pread64

0.00 0.013334 35 375 sched_yield

0.00 0.008112 114 71 pwritev2

0.00 0.003005 57 52 munmap

0.00 0.000343 18 19 6 unlinkat

0.00 0.000249 15 16 shutdown

0.00 0.000100 8 12 getdents64

0.00 0.000045 4 10 newfstatat

...

------ ----------- ----------- --------- --------- ----------------

100.00 695.311111 447 1552214 46651 total

To mimic the syscall profile of this gVisor sandbox from the perspective of

seccomp-bpf overhead, we need to have it call these system calls with the same

relative frequency. Therefore, the dimension that matters here isn’t time or

seconds or even usecs/call; it is actually just the number of system calls

(calls). In graph form:

The Pareto distribution of system calls becomes immediately clear.

seccomp-bpf filtering overhead reduction

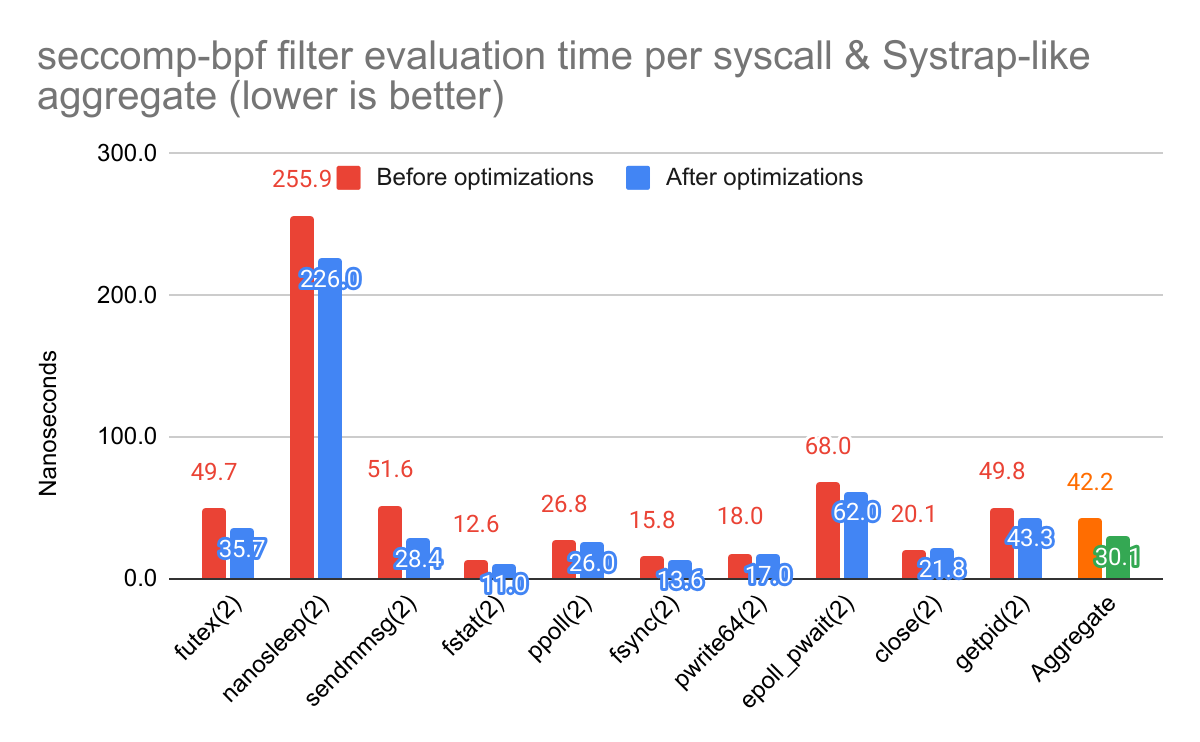

The secbench library lets us take the top 10 system calls and measure their

seccomp-bpf filtering overhead individually, as well as building a weighted

aggregate of their overall overhead. Here are the numbers from before and after

the filtering optimizations described in this post:

The nanosleep(2) system call is a bit of an oddball here. Unlike the others,

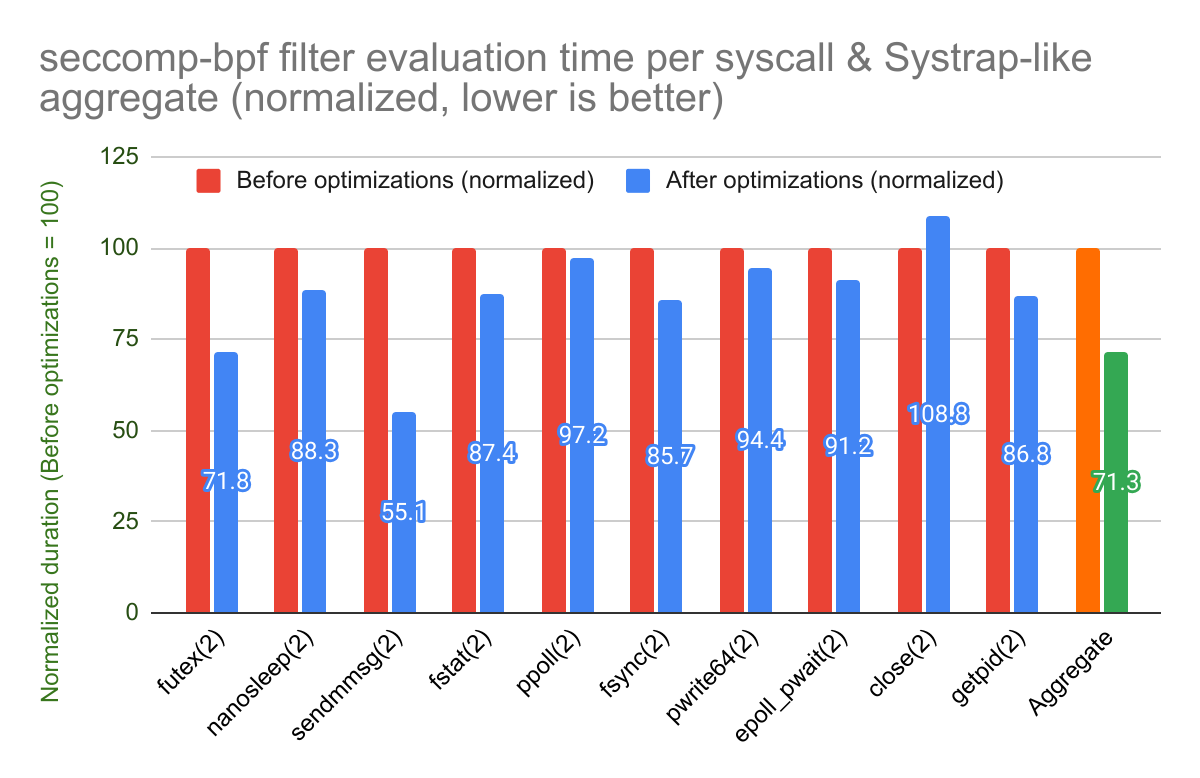

this system call causes the current thread to be descheduled. To make the

results more legible, here is the same data with the duration normalized to the

seccomp-bpf filtering overhead from before optimizations:

This shows that most system calls have had their filtering overhead reduced, but

others haven’t significantly changed (10% or less change in either direction).

This is to be expected: those that have not changed are the ones that are

cacheable: nanosleep(2), fstat(2), ppoll(2), fsync(2), pwrite64(2),

close(2), getpid(2). The non-cacheable syscalls

which have dedicated checks before the main BST, futex(2) and

sendmmsg(2), experienced the biggest boost. Lastly, epoll_pwait(2) is

non-cacheable but doesn’t have a dedicated check before the main BST, so while

it still sees a small performance gain, that gain is lower than its

counterparts.

The “Aggregate” number comes from the secbench library and represents the

total time difference spent in system calls after calling them using weighted

randomness. It represents the average system call overhead that a Sentry using

Systrap would incur. Therefore, per these numbers, these optimizations removed

~29% from gVisor’s overall seccomp-bpf filtering overhead.

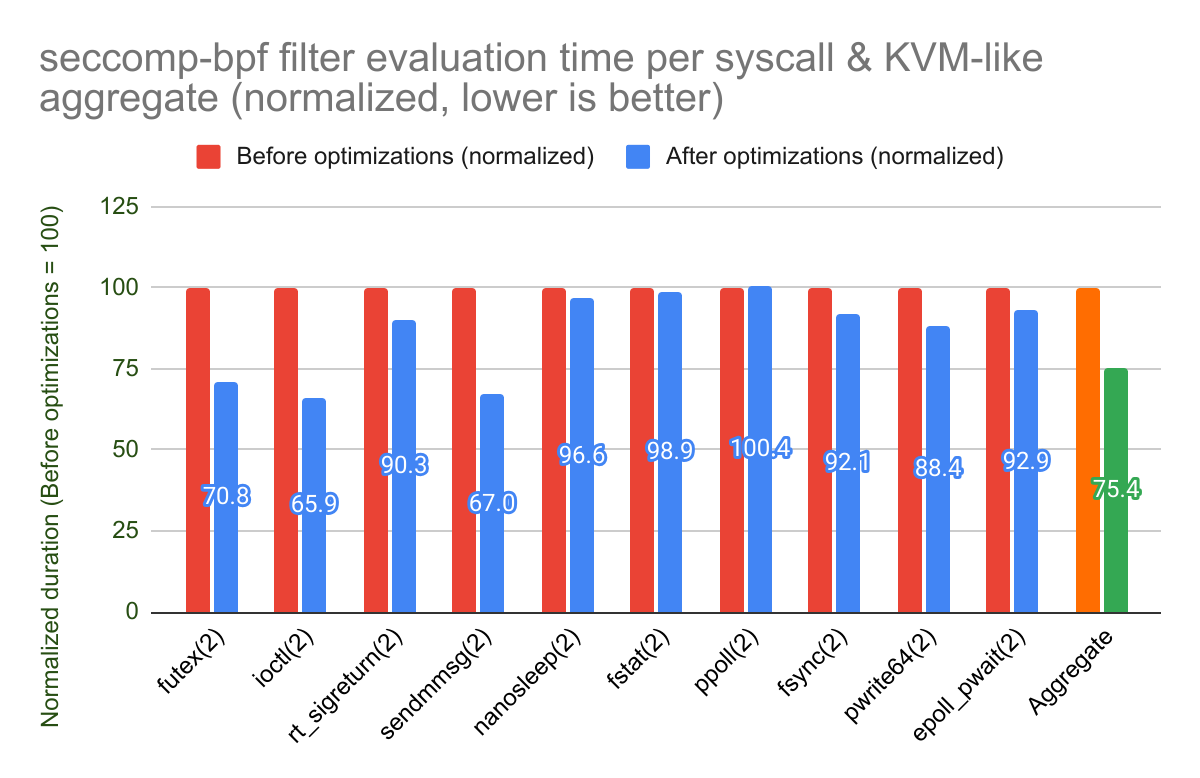

Here is the same data for KVM, which has a slightly different syscall profile

with ioctl(2) and rt_sigreturn(2) being critical for performance:

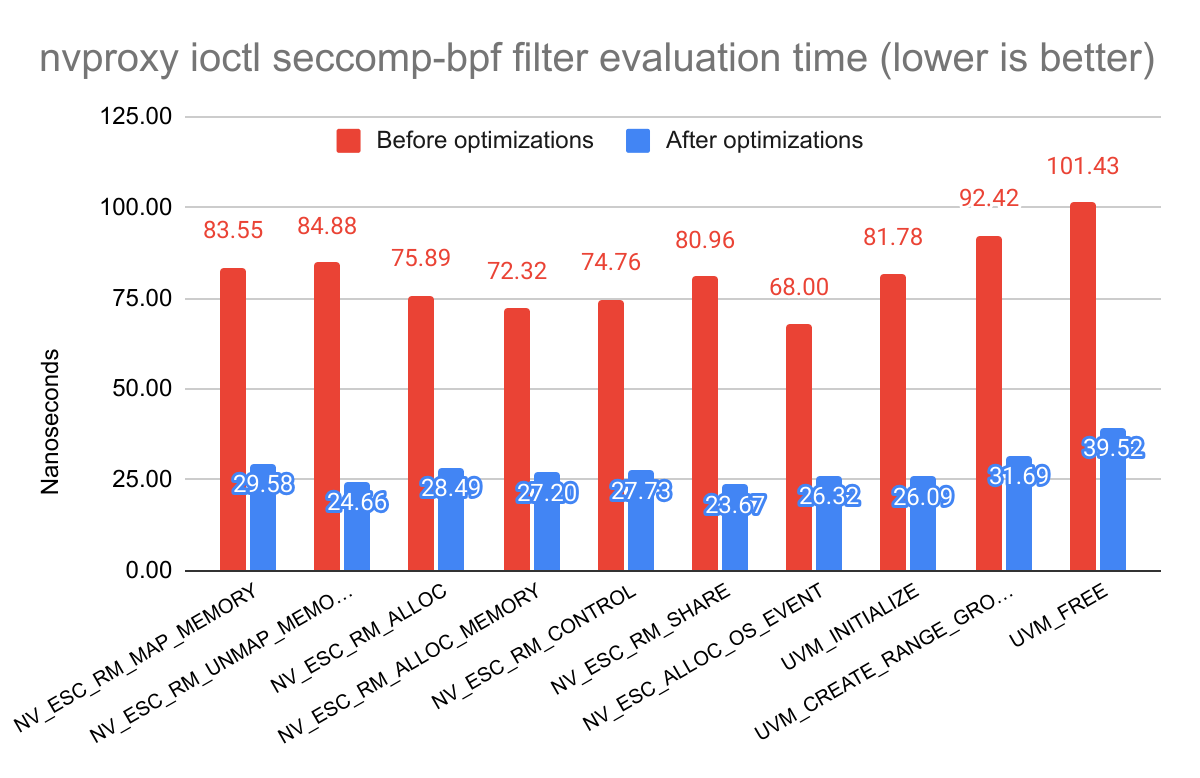

Lastly, let’s look at GPU workload performance. This benchmark enables gVisor’s

experimental nvproxy feature for GPU support.

What matters for this workload is ioctl(2) performance, as this is the system

call used to issue commands to the GPU. Here is the seccomp-bpf filtering

overhead of various CUDA control commands issued via ioctl(2):

As nvproxy adds a lot of complexity to the ioctl(2) filtering rules, this is

where we see the most improvement from these optimizations.

secfuzz: Fuzzing seccomp-bpf programs

To ensure that the optimizations above don’t accidentally end up producing a

cBPF program that has different behavior from the unoptimized one used to do,

gVisor also has

seccomp-bpf fuzz tests.

Because gVisor knows which high-level filters went into constructing the

seccomp-bpf program, it also

automatically generates test cases

from these filters, and the fuzzer verifies that each line and every branch of

the optimized cBPF bytecode is executed, and that the result is the same as

giving the same input to the unoptimized program.

(Line or branch coverage of the unoptimized program is not enforceable, because without optimizations, the bytecode contains many redundant checks for which later branches can never be reached.)

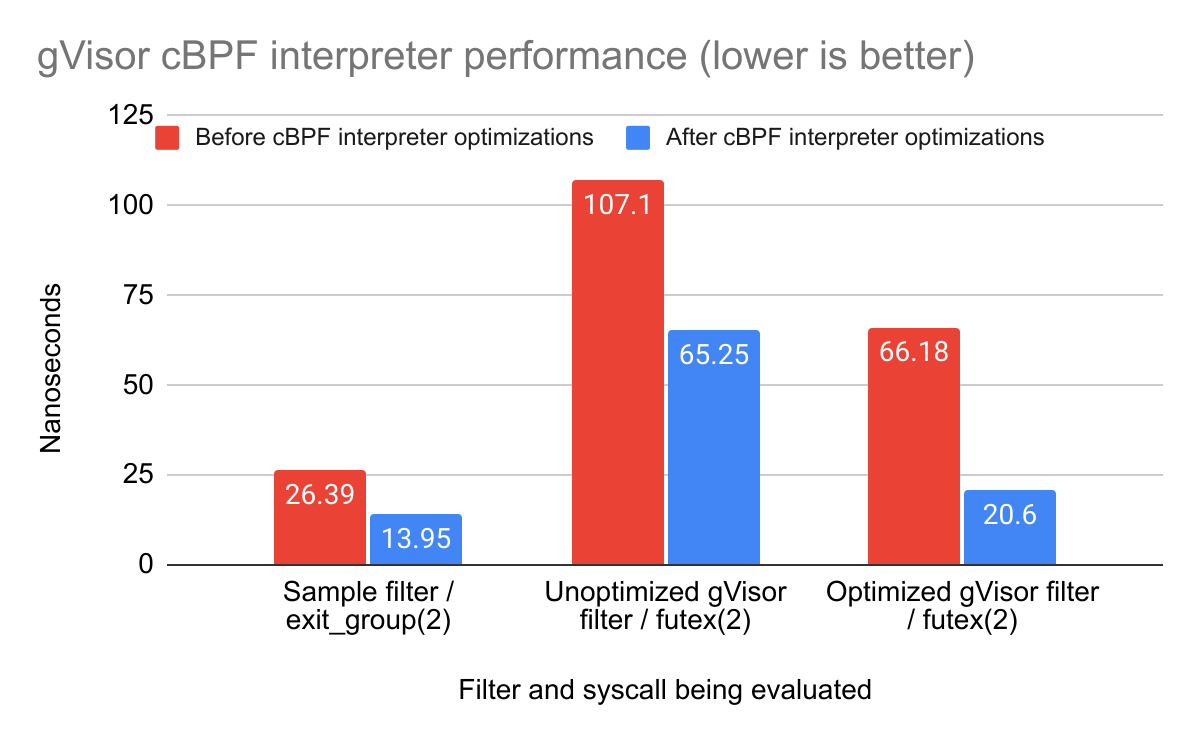

Optimizing in-gVisor seccomp-bpf filtering

gVisor supports sandboxed applications adding seccomp-bpf filters onto

themselves, and

implements its own cBPF interpreter

for this purpose.

Because the cBPF bytecode-level optimizations are lossless and are generally applicable to any cBPF program, they are applied onto programs uploaded by sandboxed applications to make filter evaluation faster in gVisor itself.

Additionally, gVisor removed the use of Go interfaces previously used for

loading data from the BPF “input” (i.e. the seccomp_data struct for

seccomp-bpf). This used to require an endianness-specific interface due to how

the BPF interpreter was used in two places in gVisor: network processing (which

uses network byte ordering), and seccomp-bpf (which uses native byte

ordering). This interface has now been replaced with

Go templates, yielding to a 2x speedup

on the reference simplistic seccomp-bpf filter. The more

load instructions are in the filter, the better the effect. (Naturally, this

also benefits network filtering performance!)

gVisor cBPF interpreter performance

The graph below shows the gVisor cBPF interpreter’s performance against three

sample filters: the reference simplistic seccomp-bpf filter,

and optimized vs unoptimized versions of gVisor’s own syscall filter (to

represent a more complex filter).

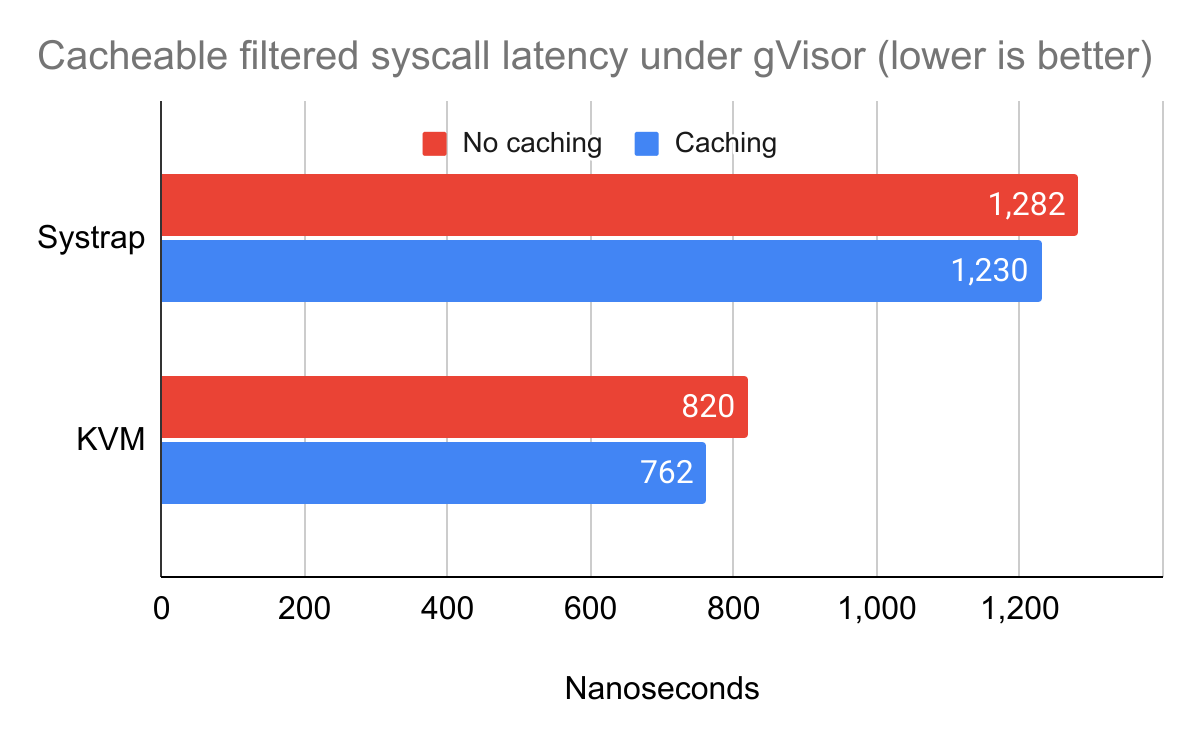

seccomp-bpf filter result caching for sandboxed applications

Lastly, gVisor now also implements an in-sandbox caching mechanism for syscalls

which do not depend on the instruction_pointer or syscall arguments. Unlike

Linux’s seccomp-bpf cache, gVisor’s implementation also handles actions other

than “allow”, and supports the entire set of cBPF instructions rather than the

restricted emulator Linux uses for caching evaluation purposes. This removes the

interpreter from the syscall hot path entirely for cacheable syscalls, further

speeding up system calls from applications that use seccomp-bpf within gVisor.

Faster gVisor startup via filter precompilation

Due to these optimizations, the overall process of building the syscall filtering rules, rendering them to cBPF bytecode, and running all the optimizations, can take quite a while (~10ms). As one of gVisor’s strengths is its startup latency being much faster than VMs, this is an unacceptable delay.

Therefore, gVisor now

precompiles the rules

to optimized cBPF bytecode for most possible gVisor configurations. This means

the runsc binary contains cBPF bytecode embedded in it for some subset of

popular configurations, and it will use this bytecode rather than compiling the

cBPF program from scratch during startup. If runsc is invoked with a

configuration for which the cBPF bytecode isn’t embedded in the runsc binary,

it will fall back to compiling the program from scratch.

Dealing with dynamic values in precompiled rules

One challenge with this approach is to support parts of the configuration that

are only known at runsc startup time. For example, many filters act on a

specific file descriptor used for interacting with the runsc process after

startup over a Unix Domain Socket (called the “controller FD”). This is an

integer that is only known at runtime, so its value cannot be embedded inside

the optimized cBPF bytecode prepared at runsc compilation time.

To address this, the seccomp-bpf precompilation tooling actually supports the

notions of 32-bit “variables”, and takes as input a function to render cBPF

bytecode for a given key-value mapping of variables to placeholder 32-bit

values. The precompiler calls this function twice with different arbitrary

value mappings for each variable, and observes where these arbitrary values show

up in the generated cBPF bytecode. This takes advantage of the fact that

gVisor’s seccomp-bpf program generation is deterministic.

If the two cBPF programs are of the same byte length, and the placeholder values

show up at exactly the same byte offsets within the cBPF bytecode both times,

and the rest of the cBPF bytecode is byte-for-byte equivalent, the precompiler

has very high confidence that these offsets are where the 32-bit variables are

represented in the cBPF bytecode. It then stores these offsets as part of the

embedded data inside the runsc binary. Finally, at runsc execution time, the

bytes at these offsets are replaced with the now-known values of the variables.

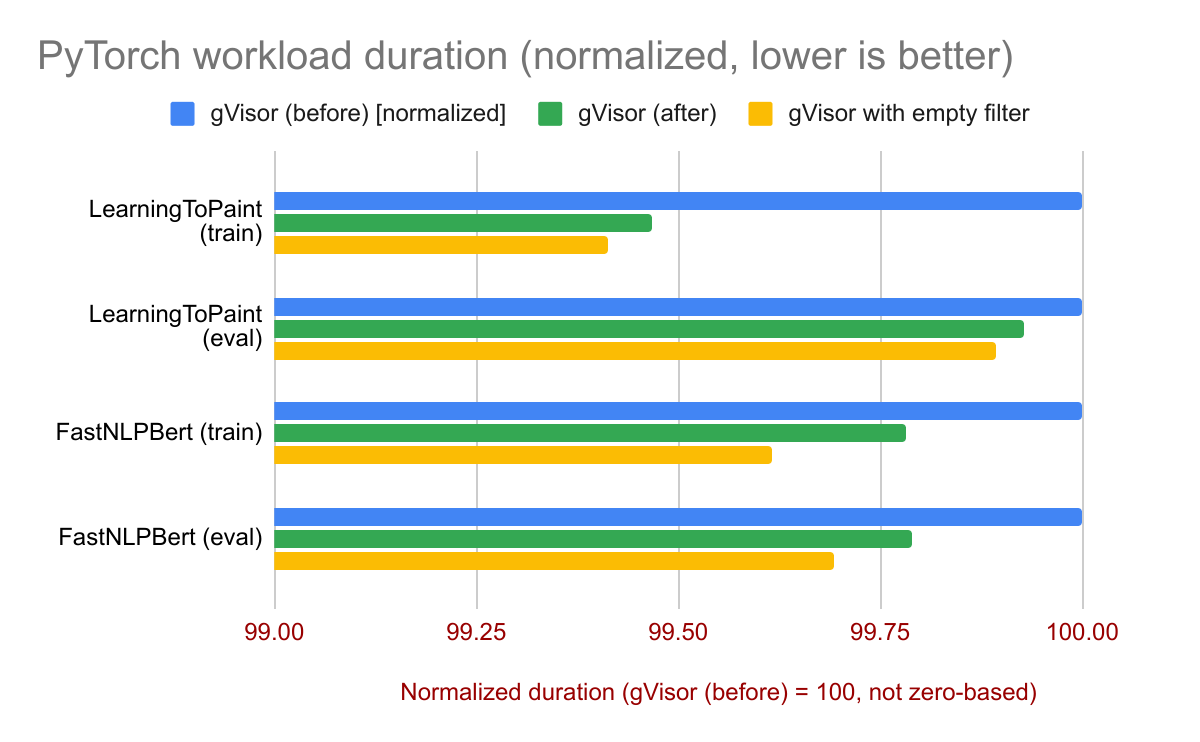

OK that’s great and all, but is gVisor actually faster?

The short answer is: yes, but only slightly. As we

established earlier, seccomp-bpf is only a

small portion of gVisor’s total overhead, and the secbench benchmark shows

that this work only removes a portion of that overhead, so we should not expect

large differences here.

Let’s come back to the trusty ABSL build benchmark, with a new build of gVisor with all of these optimizations turned on:

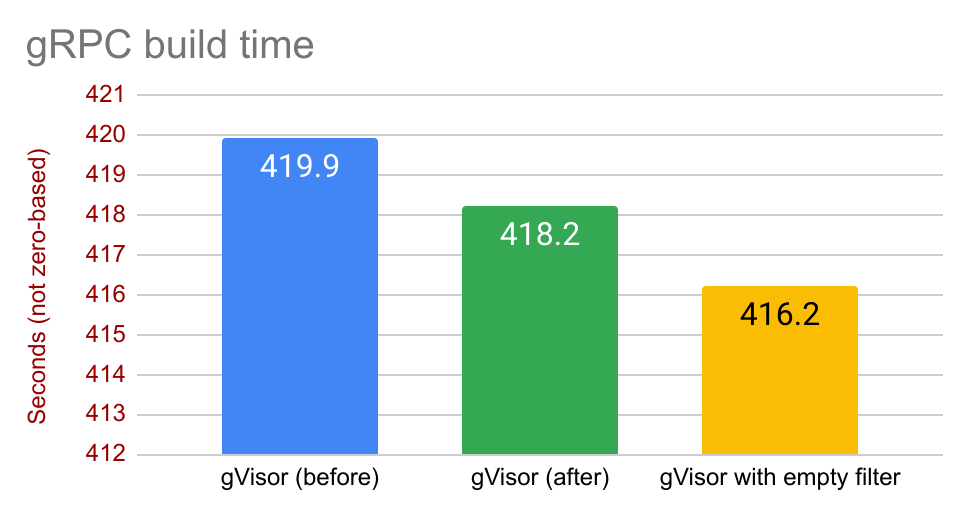

Let’s zoom the vertical axis in on the gVisor variants to see the difference better:

This is about in line with what the earlier benchmarks showed. The initial

benchmarks showed that seccomp-bpf filtering overhead for this benchmark was

on the order of ~3.6% of total runtime, and the secbench benchmarks showed

that the optimizations reduced seccomp-bpf filter evaluation time by ~29% in

aggregate. The final absolute reduction in total runtime should then be around

~1%, which is just about what this result shows.

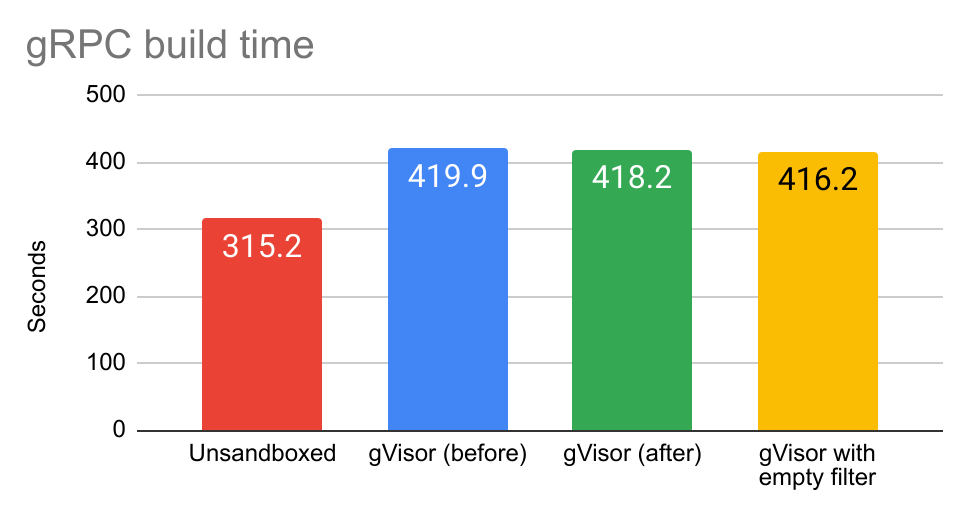

Other benchmarks show a similar pattern. Here’s gRPC build, similar to ABSL:

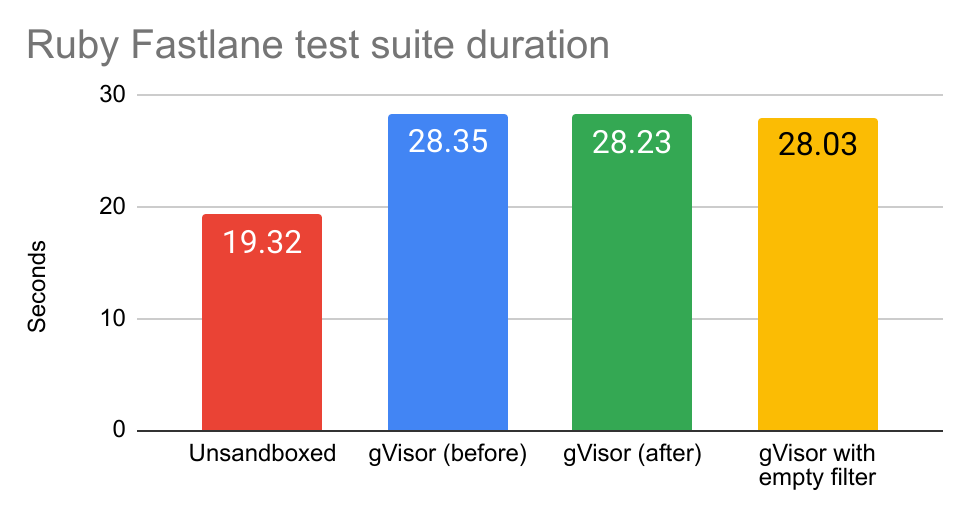

Here’s a benchmark running the Ruby Fastlane test suite:

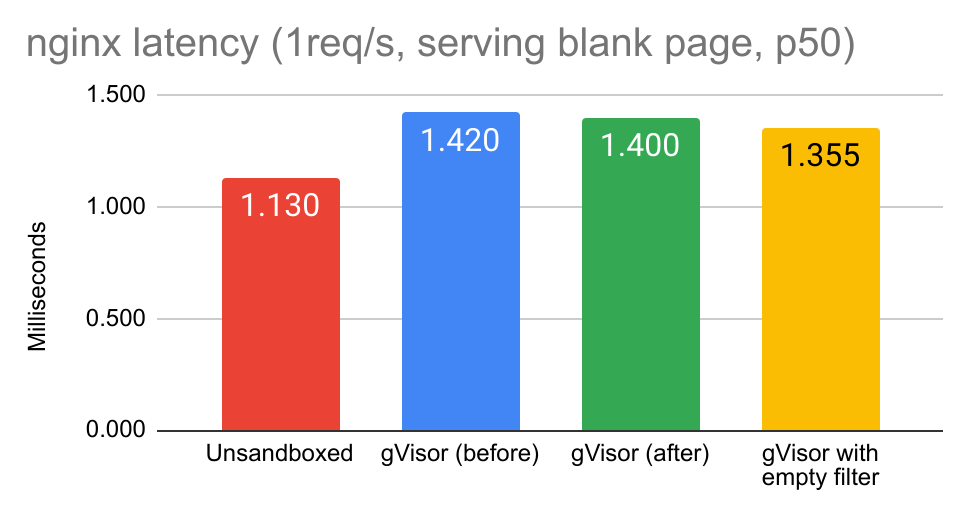

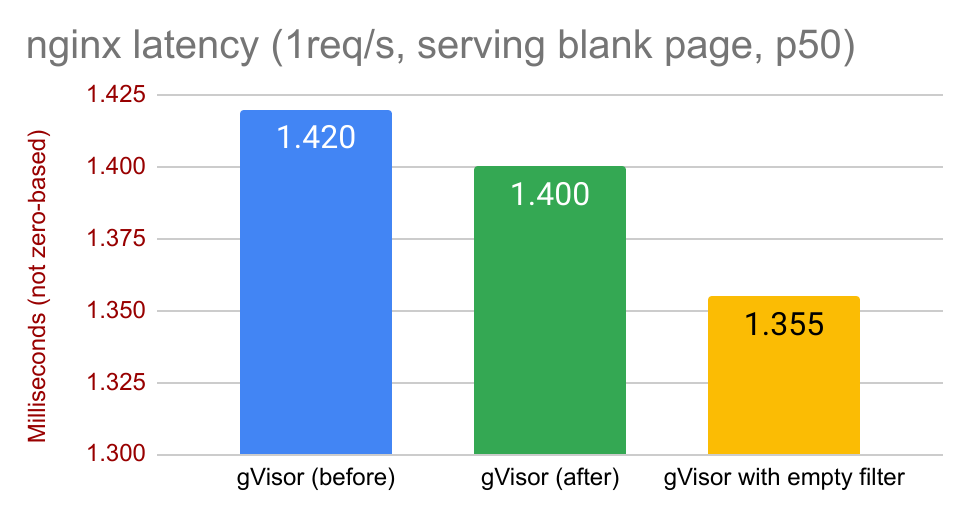

Here’s the 50th percentile of nginx serving latency for an empty webpage. Every microsecond counts when it comes to web serving, and here we’ve shaven off 20 of them.

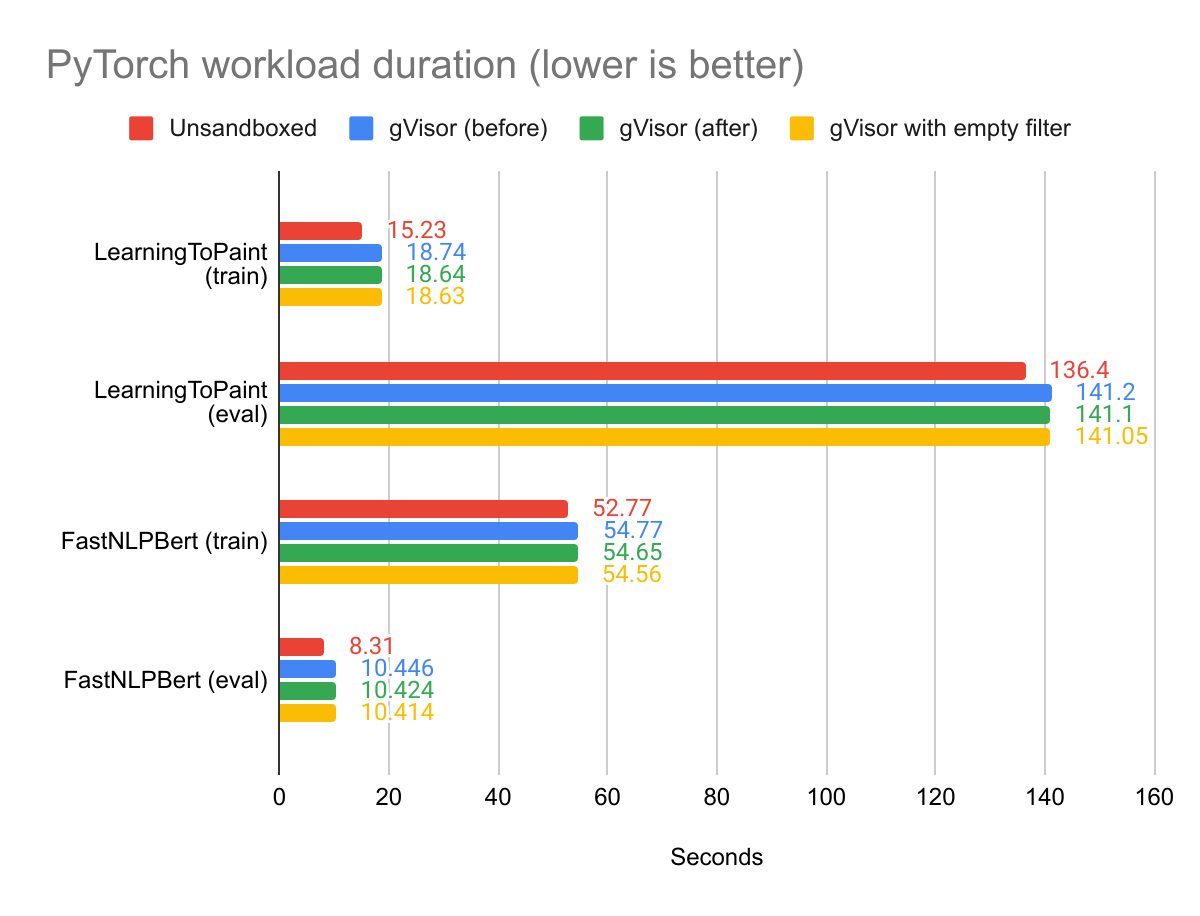

CUDA workloads also get a boost from this work. Since their gVisor-related

overhead is already relatively small, seccomp-bpf filtering makes up a

higher proportion of their overhead. Additionally, as the performance

improvements described in this post disproportionately help the ioctl(2)

system call, this cuts a larger portion of the seccomp-bpf filtering overhead

of these workload, since CUDA uses the ioctl(2) system call to communicate

with the GPU.

While some of these results may not seem like much in absolute terms, it’s important to remember:

- These improvements have resulted in gVisor being able to enforce more

seccomp-bpffilters than it previously could; gVisor’sseccomp-bpffilter was nearly half the maximumseccomp-bpfprogram size, so it could at most double in complexity. After optimizations, it is reduced to less than a fourth of this size. - These improvements allow the gVisor filters to scale better. This is

visible from the effects on

ioctl(2)performance withnvproxyenabled. - The resulting work has produced useful libraries for

seccomp-bpftooling which may be helpful for other projects: testing, fuzzing, and benchmarkingseccomp-bpffilters. - This overhead could not have been addressed in another way. Unlike other

areas of gVisor, such as network overhead or file I/O, overhead from the

host kernel evaluating

seccomp-bpffilter lives outside of gVisor itself and therefore it can only be improved upon by this type of work.

Further work

One potential source of work is to look into the performance gap between no

seccomp-bpf filter at all versus performance with an empty seccomp-bpf

filter (equivalent to an all-cacheable filter). This points to a potential

inefficiency in the Linux kernel implementation of the seccomp-bpf cache.

Another potential point of improvement is to port over the optimizations that

went into searching for a syscall number into the

ioctl(2) system call. ioctl(2) is a “grab-bag” kind of system call,

used by many drivers and other subsets of the Linux kernel to extend the syscall

interface without using up valuable syscall numbers. For example, the

KVM subsystem is

almost entirely controlled through ioctl(2) system calls issued against

/dev/kvm or against per-VM file descriptors.

For this reason, the first non-file-descriptor argument of ioctl(2)

(“request”) usually encodes something analogous to what the syscall number

usually represents: the type of request made to the kernel. Currently, gVisor

performs a linear scan through all possible enumerations of this argument. This

is usually fine, but with features like nvproxy which massively expand this

list of possible values, this can take a long time. ioctl performance is also

critical for gVisor’s KVM platform. A binary search tree would make sense here.

gVisor welcomes further contributions to its seccomp-bpf machinery. Thanks for

reading!

-

cBPF does not have a canonical assembly-style representation. The assembly-like code in this blog post is close to the one used in

bpfcbut diverges in ways to make it hopefully clearer as to what’s happening, and all code is annotated with// comments. ↩